Python and NLTK sent_tokenize

Python hosting: Host, run, and code Python in the cloud!

Dive into Natural Language Processing with Python’s NLTK, a pivotal framework in the world of data science. Unravel the techniques of tokenization and learn to efficiently process human language data using the powerful Python module, NLTK.

Natural Language Processing (NLP) is not just a buzzword; it’s an essential facet of data science in the modern era. With NLTK (Natural Language Toolkit) at the helm, Python becomes a mighty tool for dissecting and interpreting human language.

If you’re stepping into the vast field of NLP or if you’re an established linguist exploring Python’s prowess, this guide will elucidate the primary method of tokenization - the act of segmenting sentences and words from your dataset.

Recommended Course:

Master Natural Language Processing (NLP) in Python

Kickstart Your Journey with NLTK

Before diving deep, ensure you have NLTK installed. The installation methodology hinges on your Python version:

1 | # If you're on Python 2.x: |

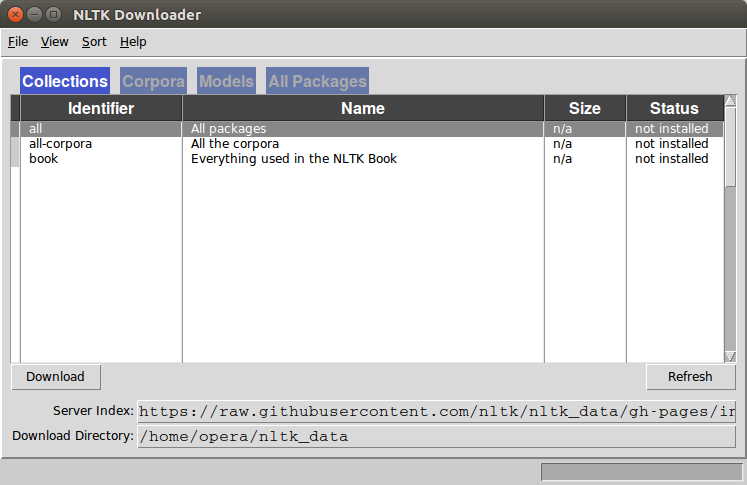

Post-installation, plunge into the Python realm and fetch the quintessential NLTK packages:

1 | import nltk |

Upon invocation, a graphical user interface will emerge. Opt for “all”, and then hit “download”. It might test your patience, so brew some coffee while it gets ready.

Demystifying Tokenization: Slicing Words & Sentences

With the NLTK arsenal ready, you’re set to tokenize text, meaning, segmenting them into words or sentences. Witness it in action:

1 | from nltk.tokenize import sent_tokenize, word_tokenize |

This will yield: [‘All’, ‘work’, ‘and’, ‘no’, ‘play’, ‘makes’, ‘jack’, ‘dull’, ‘boy’, ‘,’, ‘all’, ‘work’, ‘and’, ‘no’, ‘play’]

Now, for sentence tokenization, a subtle alteration is needed:

1 | data = "All work and no play makes jack dull boy. All work and no play makes jack a dull boy." |

This results in: [‘All work and no play makes jack dull boy.’, ‘All work and no play makes jack a dull boy.’]

Harnessing Arrays in NLTK

To streamline your operations, it’s pragmatic to compartmentalize the tokenized data into arrays:

1 | phrases = sent_tokenize(data) |

Stay tuned and elevate your skills in Natural Language Processing by exploring our extensive range of guides and resources.

Dive Deeper into NLP Topics →

Leave a Reply: