Template matching in Python from scratch

Template matching is a technique for finding areas of an image that are similar to a patch (template). It is used in fields such as robotics and manufacturing.

Related course:

Master Computer Vision with OpenCV

Introduction

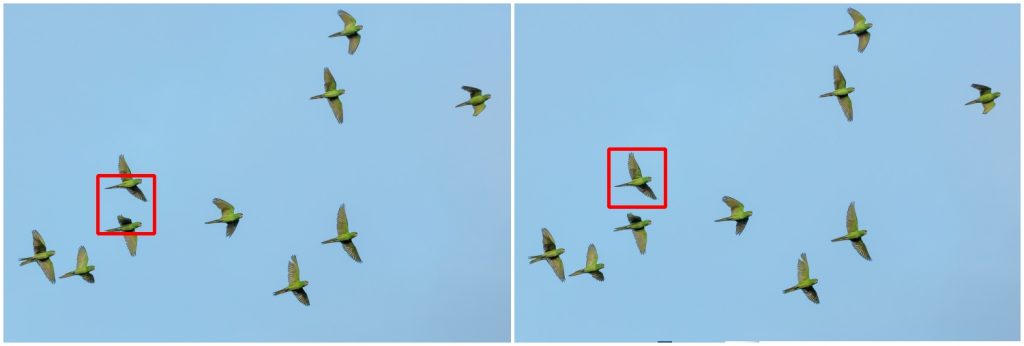

A patch is a small image with certain features. The goal of template matching is to find the patch/template in an image.

Template matching with OpenCV and Python. Template (left), result image (right)

To find it, we need two images:

- Source image (S): the image to search for matches

- Template image (T): the patch to look for

The template image T is slid over the source image S, one position at a time, and at each position the program uses a statistical measure to score how well the two match.
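The sliding comparison can be sketched from scratch with NumPy. This is only a minimal illustration, assuming grayscale images stored as 2-D arrays and the sum of squared differences as the statistic. The function name `match_ssd` is made up for this example, and the double loop is far slower than OpenCV's implementation.

```python
import numpy as np

def match_ssd(source, template):
    """Slide template over source and return (x, y) of the best match.

    Assumes 2-D grayscale arrays. The score is the sum of squared
    differences (SSD), so the smallest score marks the best match.
    """
    source = source.astype(np.float64)
    template = template.astype(np.float64)
    sh, sw = source.shape
    th, tw = template.shape

    # One score per possible top-left position of the template.
    scores = np.empty((sh - th + 1, sw - tw + 1))
    for y in range(scores.shape[0]):
        for x in range(scores.shape[1]):
            window = source[y:y + th, x:x + tw]
            scores[y, x] = np.sum((window - template) ** 2)

    best_y, best_x = np.unravel_index(np.argmin(scores), scores.shape)
    return int(best_x), int(best_y)
```

The returned point is the top-left corner of the best-matching window, given as (column, row).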

Template matching example

Let's have a look at the code:

import numpy as np

Explanation

First we load both the source image and the template image with imread(). We resize them and convert them to grayscale for faster detection.

We use the cv2.matchTemplate(image, template, method) function to find the most similar area in the image. The third argument is the statistical method.

There are six matching methods: cv2.TM_SQDIFF, cv2.TM_SQDIFF_NORMED, cv2.TM_CCORR, cv2.TM_CCORR_NORMED, cv2.TM_CCOEFF and cv2.TM_CCOEFF_NORMED (named CV_TM_* in the older OpenCV API). They are simply different statistical comparison measures.

Finally, we compute the corners of the matching rectangle, draw it on the image and display the result.

Limitations

Template matching is neither scale invariant nor rotation invariant. It is a very basic and straightforward method that finds the most strongly correlated area. Whether it is suitable therefore depends on the kind of application you want to build. For input whose scale and rotation do not change, this method works well.

Car tracking with cascades

In this tutorial we will look at vehicle tracking using Haar features. We have a Haar cascade file trained on cars.

The program will detect regions of interest, classify them as cars and show rectangles around them.

Detecting with cascades

Let's start with the basic cascade detection program:

#!/usr/bin/python

This detects cars on the screen, but it also picks up noise, and the rectangles sometimes jitter. To avoid these problems, we have to improve our car tracking algorithm. We decided on a simple solution.

Car tracking algorithm

For every frame:

- Detect potential regions of interest

- Filter the detected regions based on vertical and horizontal similarity

- If it's a new region, add it to the collection

- Clear collection every 30 frames
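The steps above can be sketched in plain Python. This is a rough outline, not the original program: the detector and the filter are passed in as callables, the names `track` and `is_new_region` are made up, and the 40-pixel tolerance used to decide whether a region is new is an assumption.

```python
def is_new_region(rect, collection, tolerance=40):
    """True if no stored rectangle has its top-left corner close to rect's."""
    x, y = rect[0], rect[1]
    return all(abs(rx - x) >= tolerance or abs(ry - y) >= tolerance
               for rx, ry, _, _ in collection)

def track(frames, detect, passes_filter, reset_every=30):
    """Yield a copy of the collection of (x, y, w, h) regions after each frame."""
    collection = []
    for index, frame in enumerate(frames, start=1):
        for rect in detect(frame):                   # 1. candidate regions
            if not passes_filter(frame, rect):       # 2. similarity filter
                continue
            if is_new_region(rect, collection):      # 3. keep only new regions
                collection.append(tuple(rect))
        yield list(collection)
        if index % reset_every == 0:                 # 4. periodic reset
            collection.clear()
```

Keeping regions for a fixed number of frames smooths over frames in which a car is briefly missed, and the periodic reset stops stale regions from accumulating.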

Removing false positives

The mean squared error function is used to remove false positives. We compare the vertical and horizontal sides of each detected image. If the difference is too large or too small, it cannot be a car.
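One way to read this filter is sketched below with NumPy. Mirroring one half before comparing is an assumption about how the sides are compared, and the thresholds `low` and `high` are placeholders that would have to be tuned on real footage.

```python
import numpy as np

def mse(a, b):
    """Mean squared error between two equally sized images."""
    diff = a.astype(np.float64) - b.astype(np.float64)
    return float(np.mean(diff ** 2))

def half_differences(region):
    """MSE between left/right halves and between top/bottom halves.

    One half of each pair is mirrored first, so a perfectly symmetric
    region scores 0 (an assumption of this sketch).
    """
    h, w = region.shape[:2]
    left, right = region[:, :w // 2], region[:, w - w // 2:]
    top, bottom = region[:h // 2], region[h - h // 2:]
    horizontal = mse(left, right[:, ::-1])   # mirror the right half
    vertical = mse(top, bottom[::-1])        # mirror the bottom half
    return horizontal, vertical

def passes_symmetry_filter(region, low=100.0, high=10000.0):
    """Reject regions whose differences are too small or too large."""
    horizontal, vertical = half_differences(region)
    return low < horizontal < high and low < vertical < high
```

A uniform patch of road scores close to zero on both measures and is rejected, while a region mixing unrelated objects tends to score very high.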

ROI detection

A car may not be detected in every frame. If a new car is detected, it is added to the collection.

We keep this collection for 30 frames, then clear it.

#!/usr/bin/python

Final notes

Haar cascades are not rotation invariant; changes in scale and position are handled only by the detector's multi-scale sliding-window search. In addition, detecting vehicles with Haar cascades may work reasonably well, but other algorithms (for example, those based on salient points) may give better results.
