sent_tokenize

In this article you will learn how to tokenize data (by words and sentences).

Install NLTK

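Install NLTK with Python 2.x using:

    pip install nltk

Install NLTK with Python 3.x using:

    pip3 install nltk

Recent NLTK releases support Python 3 only, so Python 3 is the better choice for new code.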

Installation is not complete after these commands. Open python and type:

|

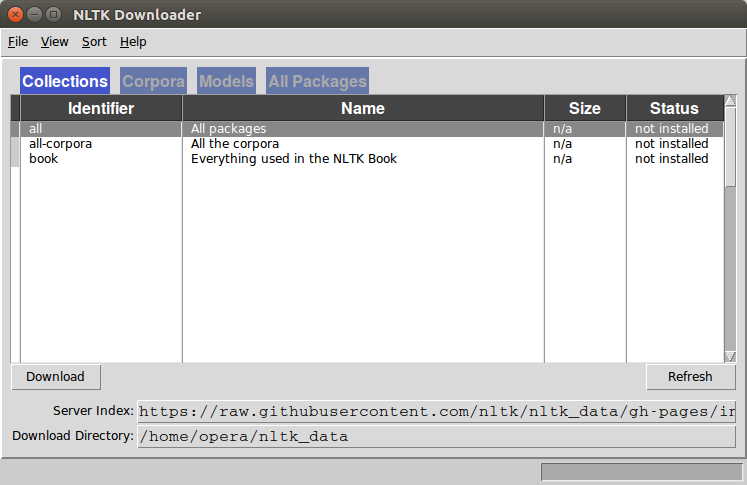

A graphical interface will be presented:

Click all and then click download. It will download all the required packages which may take a while, the bar on the bottom shows the progress.
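You do not need every package just to tokenize text. As a lighter alternative, the sketch below downloads a single package without the GUI by passing a package id to nltk.download(). The helper name ensure_resource() is hypothetical. The package names depend on your NLTK version, so treat them as an assumption: recent releases of the tokenizers load a resource called punkt_tab, while older releases load punkt.

```python
import nltk


def ensure_resource(resource_path: str, package: str) -> bool:
    """Hypothetical helper: return True if an NLTK data resource is
    installed, downloading it (without the GUI) when it is missing.

    resource_path is what nltk.data.find() looks up, e.g. "tokenizers/punkt_tab";
    package is the downloader id, e.g. "punkt_tab".
    """
    try:
        nltk.data.find(resource_path)
        return True  # already installed, nothing to download
    except LookupError:
        pass  # not found in any of the directories in nltk.data.path

    # nltk.download() signals failure (for example, no network access)
    # by returning False rather than raising, so pass its result through.
    return nltk.download(package, quiet=True)
```

For example, ensure_resource("tokenizers/punkt_tab", "punkt_tab") returns True once the model is available. On an older NLTK, use "tokenizers/punkt" and "punkt" instead. Checking with nltk.data.find() first means the download server is only contacted when the data is actually missing.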

Tokenize words

A sentence or data can be split into words using the function word_tokenize(), which returns a Python list of tokens.

For a sentence that contains a comma, every token in that list is a word except the comma: punctuation marks and other special characters are treated as separate tokens.
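Here is a minimal sketch of word_tokenize() using a made-up sentence that contains a comma (it is not the article's original data). Passing preserve_line=True, an existing parameter of word_tokenize(), skips the sentence-splitting step. For a single sentence this gives the same tokens as the default call, and it lets the snippet run before the Punkt model is downloaded. For multi-sentence text, keep the default: the word tokenizer only splits off a period at the end of the string it receives. The last lines show one way to drop punctuation tokens.

```python
from nltk.tokenize import word_tokenize

# Illustrative text (made up for this example); note the comma.
data = "Natural language processing is fun, and Python makes it easy"

# preserve_line=True treats the whole string as one sentence, so no
# Punkt sentence model is needed for this call.
tokens = word_tokenize(data, preserve_line=True)
print(tokens)
# ['Natural', 'language', 'processing', 'is', 'fun', ',', 'and', 'Python', 'makes', 'it', 'easy']

# The comma is its own token; keep only alphanumeric tokens if you want words.
words = [token for token in tokens if token.isalnum()]
print(words)
```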

Tokenizing sentences

The same principle can be applied to sentences: simply change the function to sent_tokenize(). If the variable data holds two sentences, sent_tokenize(data) returns a list of two strings, one per sentence.
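Here is a minimal sketch of sent_tokenize() on an illustrative two-sentence string (not the article's original data). sent_tokenize() relies on NLTK's pre-trained Punkt model and raises LookupError if that data has not been downloaded. For that case, the sketch falls back to NLTK's PunktSentenceTokenizer with default, untrained parameters. This fallback is a simplification: it handles plain text like this, but unlike the trained model it knows no abbreviations.

```python
from nltk.tokenize import sent_tokenize
from nltk.tokenize.punkt import PunktSentenceTokenizer

# Two sentences in one string (illustrative text).
data = "Tokenizing text is easy. NLTK does the hard work."

try:
    # Uses NLTK's pre-trained English Punkt model.
    sentences = sent_tokenize(data)
except LookupError:
    # Model not downloaded: an untrained Punkt tokenizer still splits simple
    # text, but it may wrongly split after abbreviations such as "Dr.".
    sentences = PunktSentenceTokenizer().tokenize(data)

print(sentences)
# ['Tokenizing text is easy.', 'NLTK does the hard work.']
```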

NLTK and arrays

Both functions return Python lists (Python's counterpart of arrays), so if you wish you can store the words and sentences in variables and work with them later.
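One possible extension of storing results in lists, sketched here and not part of the original article, keeps one list of words per sentence. The result is a nested list that works like a two-dimensional array. The text is illustrative. If the pre-trained Punkt model is missing, sent_tokenize() raises LookupError, and the sketch falls back to an untrained PunktSentenceTokenizer. Each item is already a single sentence, so the words are tokenized with preserve_line=True.

```python
from nltk.tokenize import sent_tokenize, word_tokenize
from nltk.tokenize.punkt import PunktSentenceTokenizer

data = "Tokenizing text is easy. NLTK does the hard work."

try:
    phrases = sent_tokenize(data)  # list of sentence strings
except LookupError:
    # Punkt model not downloaded: untrained fallback, fine for simple text.
    phrases = PunktSentenceTokenizer().tokenize(data)

# Nested list: one list of word tokens per sentence. Each phrase is already
# one sentence, so preserve_line=True avoids splitting it again.
words_per_sentence = [word_tokenize(phrase, preserve_line=True) for phrase in phrases]

# Flat list of every token in the text.
all_words = [word for sentence in words_per_sentence for word in sentence]

print(phrases)
print(words_per_sentence)
print(all_words)
```

For this text, all_words contains the same tokens that word_tokenize(data) returns for the whole string, because word_tokenize() itself splits the text into sentences before tokenizing the words.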

Posted in NLTK
